-

Timeline:

1.5 weeks (March 16th to 25th, 2022)

-

Project Type:

Solo, conceptual

-

Contributions:

Full scope (user interviews, affinity map, sketches, wireframes, and prototype)

-

Tools used:

Lucidchart, Marvel app, Figma

Project Context

Task: Design a platform that uses AR technology to improve the usability of a mobile application.

Outcome

A prototype for a mobile app that uses AR to make restaurant search quick and convenient while on the go. The app uses the phone camera to point users toward nearby restaurants and gives them a quick look inside each restaurant, along with reviews.

-

Empathize

User Research

-

Define

Problem Statement and How Might We

-

Ideate

Sketching

-

Prototype

Wireframing and Prototyping

-

Test

Testing and Iterating

Empathize

User Research

Research method: User interviews

Goal: To discover how people go about finding new restaurants and uncover any pain points they experience along the way.

User Interviews

I spent the first two days of the project recruiting people to interview. By the end of the first week, I had interviewed 10 people, all of whom had strong but differing opinions about trying new restaurants.

Here are some of the problems expressed by users:

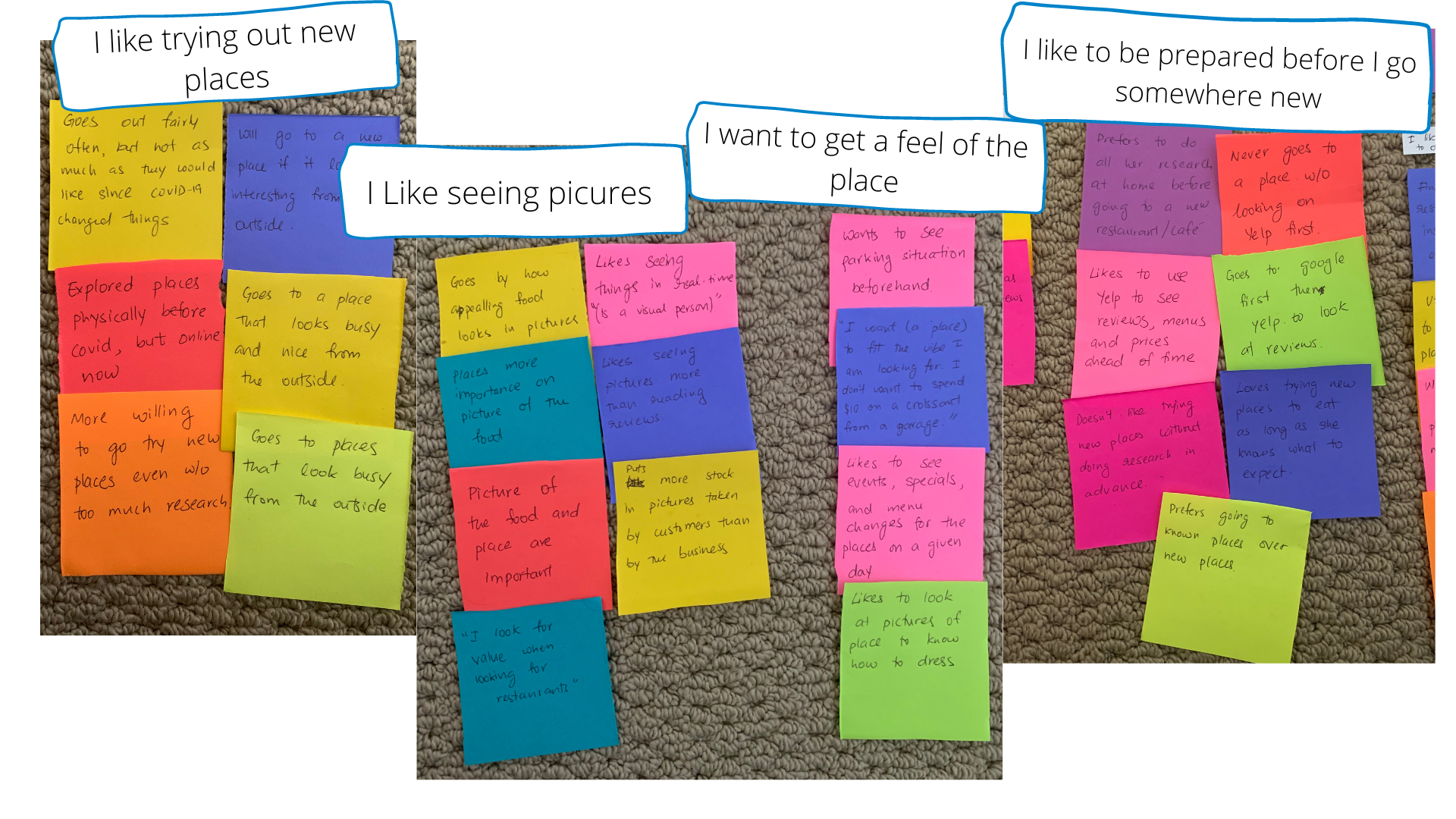

Affinity Mapping

After finishing the interviews, I wrote the individual insights from each interview on post-its and grouped them according to the trends I was noticing.

Here are the main trends I found, expressed as “I” statements:

Define

Problem Statement

Based on these trends, I arrived at the following problem statement:

Users want a convenient way to search for restaurants near them so that they can quickly explore restaurants in their area.

So, how might we make restaurant search quicker and more interactive while users are on the go?

Ideate

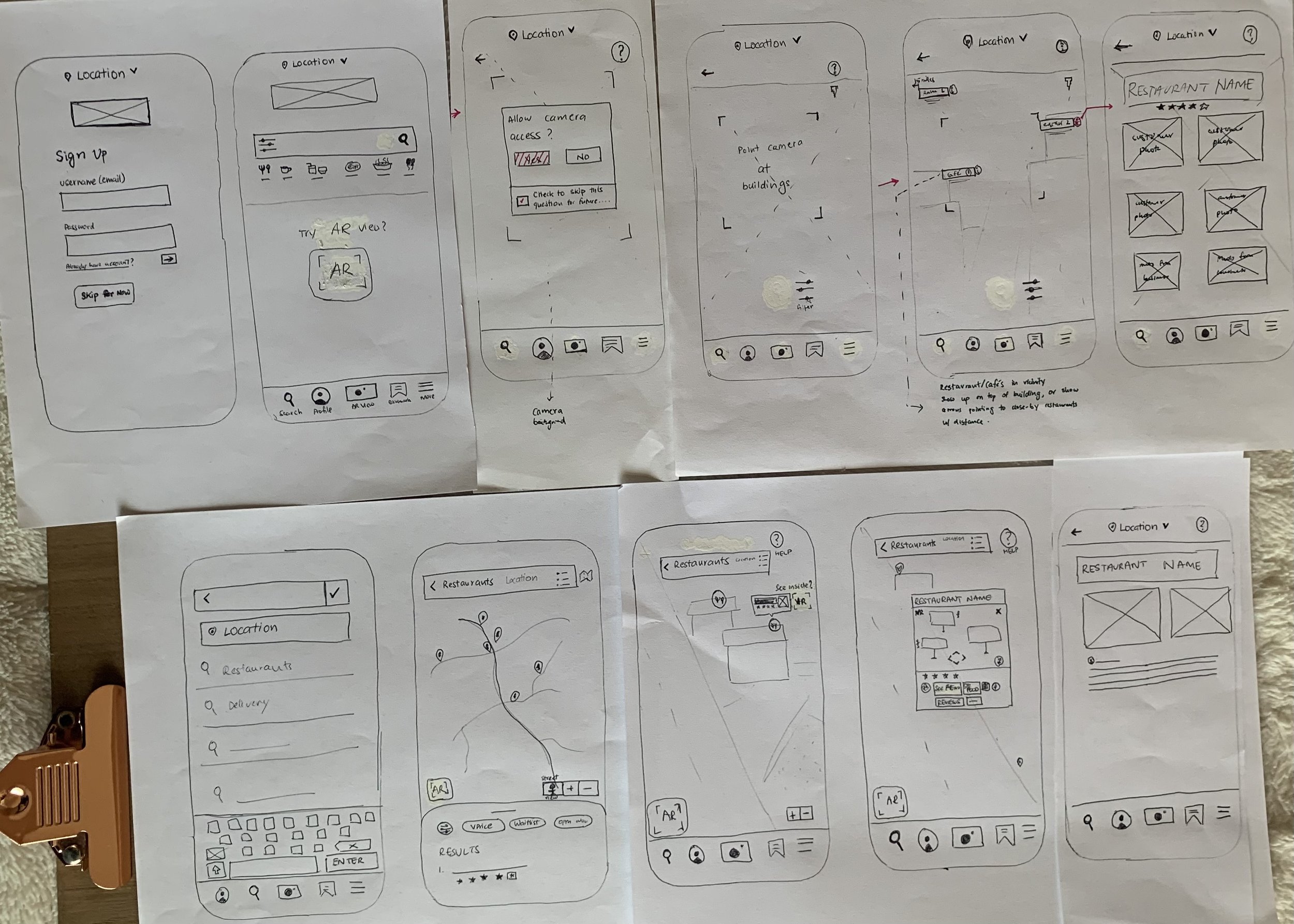

Sketching

After the research phase, I sketched out ideas for solving the user problems highlighted above. To get a better idea of which features to include in my app, I looked at the apps users said they mainly relied on in their searches, such as Yelp, Google Maps, and DoorDash.

Initial Usability Testing

The sketches above are the versions I finalized after some trial and error.

I tested my initial sketches using the Marvel app and realized I needed to make quite a few changes for the experience to feel intuitive to users.

These are the problems I uncovered through this initial testing: (1) users were unclear on how to get to the AR screen from the homepage, and (2) users who were new to AR did not know what to do with the camera view in the app.

The app's homepage was also somewhat busy, which made it hard for users to understand, especially in sketch form. While creating the final flow for the app, I made sure to address the problems uncovered during this initial testing.

User Flow

This is the final user flow I implemented in the prototype. From the homepage, there are two ways to reach the AR screen: through a map, or directly through a camera icon on the homepage that guides the user through using the AR camera.

For new users, the app then runs an onboarding sequence that explains how to use it. My initial usability testing showed that this was especially essential for this app.

Finally, the app prompts the user to open their camera and point it at the street they are on (when outside). It then shows which buildings on the street are restaurants, along with reviews and an inside view when the user zooms in.

Prototype

Wireframing

During the second week of the project, I started building wireframes in Figma based on my observations from the initial usability testing and the final user flow.

Home Page to Map Search Screen

Onboarding Screens

I added the onboarding screens while making the wireframes and made the AR view easier to understand by including a sample of what the user would see through the camera.

AR Camera Screens

Low-fidelity Prototype

Here is a walkthrough of the final low-fidelity prototype.

Test

Usability Testing

In the last two days before my final presentation for this project, I tested the prototype with three users.

Scenario: You are walking around a new city and trying to find a place to eat; try using the Tablez app to help you find a new restaurant.

Task: Sign up for the app as a new user, and look for a restaurant using the AR feature.

Results: This time around, users were able to navigate the app fairly easily, apart from a few minor roadblocks:

They didn’t understand how to zoom in to see a restaurant's interior, even though the instructions mentioned it.

The close and back buttons on the AR view page were not working.

The phone camera area hid the location finder at the top of the screen.

I implemented changes based on these observations in the final prototype shown above.

Next Steps

If I were to move forward with this app:

I would make the “save restaurants” feature functional and add the ability to filter restaurants by cuisine.

I would add the option to see a virtual view of the area from home by zooming in on the map.

To read more about this project, see my Medium article.